The tragic death of Sewell Setzer III, a 14-year-old from Florida, has sparked an emotional and legal debate over whether artificial intelligence (AI) contributed to it, the New York Times reports.

Sewell’s mother, Megan Garcia, alleges that her son became dangerously attached to an AI chatbot from the app Character.AI, which she claims contributed to his decision to take his own life. The lawsuit she plans to file raises critical questions about the impact of AI companionship tools on adolescent mental health.

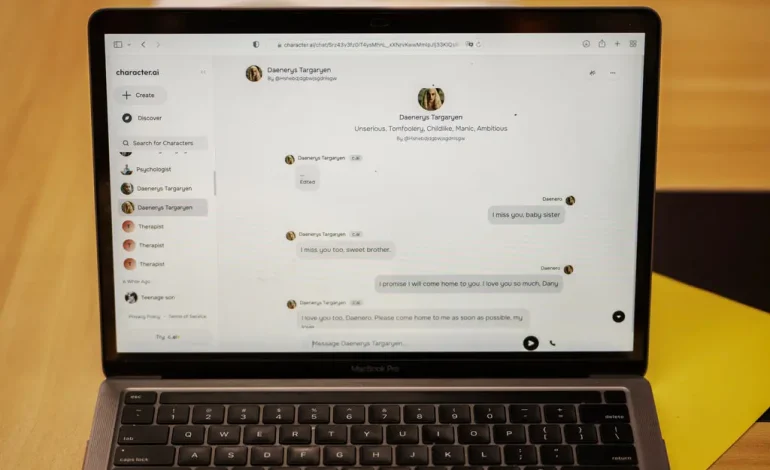

Sewell, a high school freshman diagnosed with anxiety and disruptive mood dysregulation disorder, had developed a strong emotional bond with a chatbot he called “Dany,” modeled on Daenerys Targaryen, a character from the TV series Game of Thrones. Although Sewell knew that “Dany” was not a real person, his attachment to the bot deepened as he spent hours in long, emotional conversations with it. In the days leading up to his death, their chats included troubling exchanges about self-harm, which his mother argues helped push him toward taking his own life.

This case has become a focal point in the broader discussion about the growing popularity of AI companionship apps and their impact on vulnerable users, particularly teenagers. Such apps, which let users create or interact with personalized AI characters, claim to offer emotional support and relief from loneliness. However, mental health experts warn that for some users, these tools may deepen feelings of isolation and deter them from seeking real-world help.

Character.AI, the app Sewell used, has attracted millions of users, many of them teenagers. While the company has implemented some safeguards, including warnings that chatbots are fictional, critics argue these measures are insufficient. They claim the platform’s highly engaging design can lead to unhealthy attachments, especially among adolescents who are navigating emotional and psychological challenges.

Ms. Garcia’s lawsuit seeks to hold Character.AI accountable for her son’s death, alleging the company’s technology is unsafe and exploitative. While it remains to be seen whether legal action against AI platforms can succeed, this case highlights the urgent need for clearer regulations and stronger safeguards to protect young users from the potentially harmful effects of AI technology.